As more and more companies throw their weight behind designing autonomous cars, patterns are emerging that show which features move the industry forward and which don’t.

As we approach a self-driving future, the number of sensors in vehicles will increase tenfold, but which types of sensor will provide the most value?

Vision Systems – Sensors

Before the DARPA Grand Challenges and Google caused the development of autonomous vehicles to go viral, the sensors for advanced driver-assistance systems (ADAS) were fairly simple, sampling rates were low, and electronic control unit (ECU) processing was well within the capabilities of a fairly weedy microcontroller. All that changed when it was realised that replacing human sensory input (eyes) and image processing (brain) was going to be a mammoth task. Up to that point, the most sophisticated system for monitoring what was happening outside the car was parking radar, based on ultrasonic transducers! The search is on to develop a vision sensor as good as the human eye. Or so it would seem. In fact, when you lay out the specification for an artificial eye able to provide the quality of data necessary for driving automation, the human eye seems rubbish by comparison. Consider this loose specification for our vision system:

• Must provide 360° horizontal coverage around the vehicle

• Must resolve objects in 3D very close to, and far from, the vehicle

• Must resolve/identify multiple static/moving objects up to maximum range

• Must do all the above in all lighting and weather conditions

• Finally, must provide all this data in ‘real time’.

Now see how well human vision meets these requirements:

• Two eyes provide up to 200° horizontal field of view (FOV) with eye movement

• 3D (binocular) vision exists for 130° of the FOV

• Only the central 45° to 50° of the FOV is ‘high-resolution’, with maximum movement and colour perception

• Outside the central zone, perception falls off rapidly – ‘peripheral vision’

• Automatic iris provides good performance in varying lighting conditions

• Equivalent video ‘frame rate’ good in central zone, bad at limits of peripheral vision

• Each eye has a ‘blind-spot’ where the optic nerve joins the retina

• All of the above ignores age-, disease- and injury-related defects, of course

The upshot of this is that the human driver’s eyes only provide acceptable vision over a narrow zone looking straight ahead. And then there’s the blind spot. Given the limitations of eyeball ‘wetware’, why don’t we see life as if through a narrow porthole with part of the image missing? The answer lies with the brain: it interpolates or ‘fills in the blanks’ with clever guesswork. But it’s easily fooled.
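To put rough numbers on that comparison, the angular figures quoted above can be expressed as a share of the 360° the specification asks for. This is only a back-of-envelope sketch: the function name `coverage_fraction` is made up for illustration, and the central zone is taken as 50°, the upper end of the range quoted.

```python
REQUIRED_FOV_DEG = 360.0  # horizontal coverage asked for in the loose specification


def coverage_fraction(fov_deg: float, required_deg: float = REQUIRED_FOV_DEG) -> float:
    """Return the share of the required horizontal coverage that a given FOV provides."""
    if not 0.0 <= fov_deg <= required_deg:
        raise ValueError("fov_deg must lie between 0 and required_deg")
    return fov_deg / required_deg


# Figures quoted in the list above (central zone assumed at its 50° upper bound).
human_vision_deg = {
    "total field of view (with eye movement)": 200.0,
    "binocular (3D) zone": 130.0,
    "high-resolution central zone": 50.0,
}

if __name__ == "__main__":
    for zone, fov in human_vision_deg.items():
        print(f"{zone}: {coverage_fraction(fov):.0%} of 360°")
```

On these figures, the high-resolution central zone covers only about one seventh of the full circle, which is why the brain has so much filling in to do.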

1. Artificial Eyes – Camera

The camera is an obvious candidate for an artificial eye, and in many ways it offers superior performance to its natural counterpart:

• Maintains high resolution in pixels and colour across the full width of its field of view

• Maintains constant ‘frame-rate’ across the field of view

• Two cameras provide stereoscopic 3D vision

• It’s a ‘passive’ system, so no co-existence problems with other vehicles’ transmissions

• Maintains performance over time – doesn’t suffer from hay-fever or macular degeneration
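The stereoscopic point can be made concrete with the standard pinhole relation for a rectified stereo pair, Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras and d is the disparity in pixels. The sketch below is illustrative only: the function names are hypothetical, and the focal length, baseline and disparity values in the example are assumptions, not figures from any particular automotive camera.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if focal_px <= 0 or baseline_m <= 0:
        raise ValueError("focal length and baseline must be positive")
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 1.0) -> float:
    """First-order depth uncertainty for a given disparity error: dZ = Z^2 / (f * B) * dd."""
    if focal_px <= 0 or baseline_m <= 0:
        raise ValueError("focal length and baseline must be positive")
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    # Assumed example values: 1000 px focal length, 0.3 m baseline, 10 px disparity.
    f_px, b_m = 1000.0, 0.3
    z = stereo_depth_m(f_px, b_m, disparity_px=10.0)
    print(f"depth: {z:.1f} m, error per pixel of disparity: {depth_error_m(f_px, b_m, z):.2f} m")
```

Because the uncertainty grows with the square of the distance, a stereo pair that is precise for nearby objects becomes much coarser at long range, which is where the ‘far from the vehicle’ part of the specification starts to bite.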

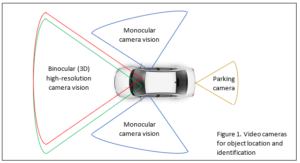

Fig. 1 above shows a system suitable for ADAS, providing such functions as forward collision avoidance, lane departure warnings, pedestrian detection, parking assistance and adaptive cruise control. The lack of 360° coverage makes the design unsuitable for Level 4 and 5 autonomous driving, however. Full around-the-car perception is possible, but that takes at least six cameras and an enormous amount of digital processing. Nevertheless, a camera-based system matches or exceeds the capabilities of human eyes in this situation. Three areas of concern remain, though:

• Performance in poor lighting conditions, i.e. at night

• Performance in bad weather. What happens when the lenses get coated with dirt or ice?

• Expensive, ruggedized cameras needed, able to work over a wide temperature range

It’s not just the ability to see traffic ahead that’s impaired; lane markings may be obscured by water and snow, rendering any lane departure warning system unreliable.
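As a rough illustration of the 360° coverage point made earlier, the number of identical cameras needed to close the circle can be estimated from each camera's horizontal FOV and the overlap kept between neighbours. The 70° FOV and 10° overlap below are assumed values chosen purely for illustration, and `cameras_for_full_coverage` is a hypothetical helper; real layouts typically mix lenses with different fields of view.

```python
import math


def cameras_for_full_coverage(fov_deg: float, overlap_deg: float = 0.0,
                              required_deg: float = 360.0) -> int:
    """Minimum number of identical cameras arranged in a ring to cover required_deg.

    Each camera contributes (fov_deg - overlap_deg) of new coverage, because
    overlap_deg of its view is shared with the neighbouring camera.
    """
    if fov_deg <= 0 or overlap_deg < 0 or overlap_deg >= fov_deg:
        raise ValueError("need fov_deg > overlap_deg >= 0")
    effective_deg = fov_deg - overlap_deg
    return math.ceil(required_deg / effective_deg)


if __name__ == "__main__":
    # Assumed example: 70° lenses with 10° of overlap between adjacent cameras.
    print(cameras_for_full_coverage(fov_deg=70.0, overlap_deg=10.0))
```

Under these assumed values the estimate comes out at six cameras; wider lenses reduce the count, but usually at the cost of angular resolution per pixel.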

Adapted from eetimes and rs-online