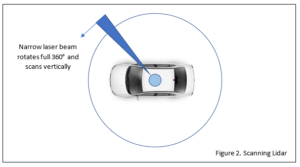

2. Artificial Eyes – Lidar

Light Detection and Ranging, or Lidar, operates on the same principle as conventional microwave radar: pulses of laser light are reflected off an object back to a detector, and the time-of-flight is measured. The beam is so narrow, and the scanning rate so fast, that accurate 3D representations of the environment around the car can be built up in ‘real-time’. Lidar scores over the camera in a number of ways:

• Full 360° 3D coverage is available with a single unit

• Unaffected by light level, with better poor-weather performance

• Better distance estimation

• Longer range

• Lidar sensor data requires much less processing than camera video
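
The time-of-flight principle described above comes down to one line of arithmetic: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below is illustrative only; the function names are hypothetical, and it assumes propagation at the vacuum speed of light, which is close enough for air.

```python
# Speed of light in vacuum (m/s); assumed to be close enough for air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_range_m(round_trip_time_s: float) -> float:
    """Convert a measured round-trip pulse time into a one-way distance in metres."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def range_to_tof_s(distance_m: float) -> float:
    """Inverse of tof_to_range_m: how long the echo takes for a given distance."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S
```

For a target 100m away, `range_to_tof_s(100.0)` gives roughly 667 nanoseconds, which indicates how fine the detector's timing has to be.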

A Lidar scanner mounted on the roof of a car (see Fig.2 above) can, in theory, provide nearly all the information needed for advanced ADAS or autodriving capabilities. The first Google prototypes sported the characteristic dome and some car manufacturers believe that safe operation can be achieved with Lidar alone. But there are downsides:

• Resolution is poorer than the camera

• It can’t ‘see’ 2D road markings, so no good for lane departure warnings

• For the same reason, it can’t ‘read’ road signs

• The prominent electro-mechanical hardware has up to now been very expensive

• Laser output power is limited as light wavelengths of 600-1000nm can cause eye damage to other road users. Future units may use the less damaging 1550nm wavelength

The cost of Lidar scanners is coming down as they go solid-state, but this has meant a drop in resolution due to fewer channels being used (perhaps as few as 8 instead of 64). Some are designed to be distributed around the car, each providing partial coverage, and avoiding unsightly bulges on the roof.
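
To get a feel for what fewer channels means, the sketch below estimates the vertical gap between adjacent beams at a given distance. It rests on assumptions that are not in the text: the channels are taken to be evenly spaced across a vertical field of view, and the 30° figure used in the note after the code is purely hypothetical, not the specification of any real unit.

```python
import math


def beam_gap_m(channels: int, vertical_fov_deg: float, distance_m: float) -> float:
    """Approximate vertical gap between adjacent beams at a given distance.

    Assumes the channels are spread evenly across the vertical field of view.
    """
    if channels < 2:
        raise ValueError("need at least two channels to have a gap")
    # Angular step between neighbouring beams, in radians.
    step_rad = math.radians(vertical_fov_deg) / (channels - 1)
    # Gap between two beams separated by that angle at the given distance.
    return distance_m * math.tan(step_rad)
```

With the assumed 30° field of view, a 64-channel unit leaves gaps of roughly 0.4m between beams at 50m, while an 8-channel unit leaves gaps of roughly 3.7m.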

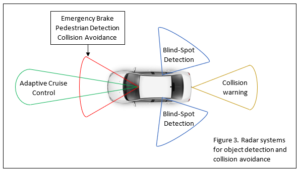

3. Artificial Eyes – Radar

Microwave radar can be used for object detection from short range (10m) to long range (100m). It can handle functions such as adaptive cruise control, forward collision avoidance, blind-spot detection, parking assistance and pre-crash alerts (see Fig.3 below). Two frequency bands are in common use: 24-29GHz for short-range and 76-77GHz for long-range work. The lower band has power restrictions and limited bandwidth because it’s shared with other users. The higher band (which may soon be extended to 81GHz) has much more bandwidth and allows higher power levels. More bandwidth translates to better resolution for both short- and long-range working, and may soon result in the phasing out of 24-29GHz for radar.

Automotive radar is available in two forms: simple pulse time-of-flight and Frequency-Modulated Continuous-Wave (FMCW). FMCW radar has a massive amount of redundancy in the transmitted signal, enabling the receiver to operate in conditions of appalling RF noise. This means that you can either have greatly extended range in benign conditions or tolerate massive interference at short range. Radar is not good enough for autodriving on its own, but it does have some plus points:

• Unaffected by light level and has good poor-weather performance

• It’s been around for a long time, so there is well-developed automotive hardware on the market

Radar has its uses, but limitations confine it to basic ADAS:

• Radar doesn’t have the resolution for object identification, only detection

• 24-29GHz band radar is unable to differentiate between multiple targets reliably

• FMCW radar requires complex signal processing in the receiver
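
The link between bandwidth and resolution mentioned above follows from the standard radar relation: range resolution is c / (2B), where B is the swept bandwidth. The sketch below applies that relation and also shows the textbook FMCW conversion from beat frequency to range for a linear chirp and a stationary target. The function names are hypothetical, and using the full 1GHz (76-77GHz) or 5GHz (76-81GHz) band span as the swept bandwidth is a best-case assumption, not a figure for any real sensor.

```python
# Speed of light in vacuum (m/s); assumed to be close enough for air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest range separation at which two targets can be told apart."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * bandwidth_hz)


def fmcw_range_m(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Range of a stationary target from the beat frequency of a linear chirp."""
    if bandwidth_hz <= 0 or chirp_s <= 0:
        raise ValueError("bandwidth and chirp duration must be positive")
    # Chirp slope in Hz per second: how fast the transmit frequency sweeps.
    slope_hz_per_s = bandwidth_hz / chirp_s
    # The echo is delayed by 2R/c, which appears as a frequency offset of
    # slope * 2R/c between transmitted and received signals.
    return SPEED_OF_LIGHT_M_PER_S * beat_hz / (2.0 * slope_hz_per_s)
```

Under these assumptions, `range_resolution_m(1e9)` comes to about 15cm and `range_resolution_m(5e9)` to about 3cm, which is why the proposed extension to 81GHz matters.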

Conclusion

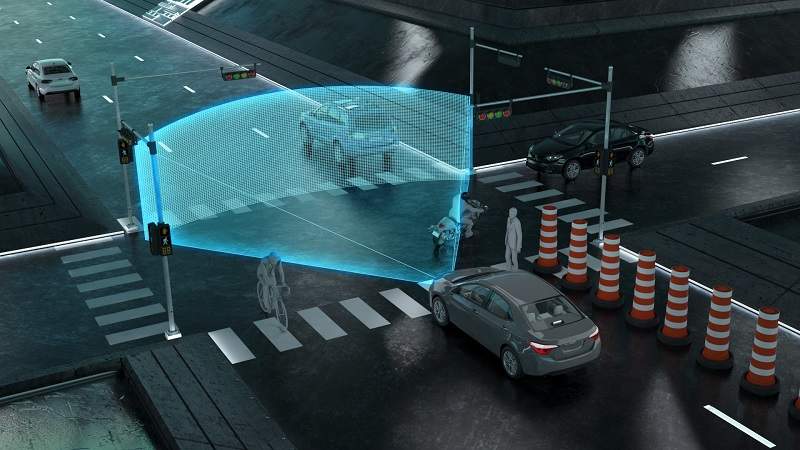

The obvious conclusion is that cameras, Lidar, radar and even ultrasonics (Sonar) will all be needed to achieve safe Level 4 and 5 autodriving, at least for the short term. Combining the outputs of all these sensor systems into a form the actual driving software can use is the job of sensor fusion, and it will be a major challenge. No doubt artificial intelligence in the form of Deep Neural Networks will be used extensively, not only for image processing and object identification but as a reservoir of ‘driving experience’ for decision making.
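
As a toy illustration of what combining sensor outputs can mean at its simplest, the sketch below fuses several independent distance estimates by inverse-variance weighting, so that the more trustworthy sensor counts for more. This is a generic textbook technique chosen for illustration, not the method of any particular vehicle; the function name and the numbers in the note after the code are hypothetical.

```python
from typing import Iterable, Tuple


def fuse_estimates(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance.
    """
    pairs = list(estimates)
    if not pairs:
        raise ValueError("need at least one estimate")
    if any(variance <= 0 for _, variance in pairs):
        raise ValueError("variances must be positive")
    # Each estimate is weighted by how certain it is (1 / variance).
    weights = [1.0 / variance for _, variance in pairs]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, pairs)) / total_weight
    # The fused variance is never larger than the smallest input variance.
    return fused_value, 1.0 / total_weight
```

For example, fusing a hypothetical radar reading of 50.0m (variance 4.0) with a Lidar reading of 52.0m (variance 1.0) gives 51.6m with variance 0.8: the result sits closer to the more precise sensor and is more certain than either input alone.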

Clearly, all three sensors bring advantages to automakers building the next wave of connected cars, but having broken down the pros and cons of each option, we can make some predictions.

Due to its higher cost, lidar might remain a premium option for the time being as OEMs figure out the cost structure of their self-driving cars. The move toward level 4 highly automated cars (SAE, 2014) will require essential safety-proven technology, but the journey through levels 2-3 will find lidar lagging behind in take rate.

Radar is a proven technology that is becoming increasingly efficient for the autonomous car. The new RFCMOS technology recently introduced to the market will allow smaller, lower-power, more efficient sensors that fit right into the OEM cost-reduction strategy. This will also make radar more complementary to cameras as the “dynamic duo”.

Cameras are the cheapest sensor of the three and will likely remain the volume leader in the near term. Their future will depend strongly on the development of the software algorithms controlling the self-driving car and on how well those algorithms can process the massive amount of data generated.

Adapted from eetimes and rs-online