Artificial Intelligence (AI) has taken the automotive industry by storm, driving the development of Level 4 and Level 5 autonomous vehicles. Why do you think AI has become so popular now, even though it has been around since the 1950s? Simply put, the reason behind this explosion of AI is the humongous amount of data available today. Connected devices and services let us collect data in every industry, and that data fuels the AI revolution. While sensors and cameras are being rapidly improved to generate more data in autonomous vehicles, Nvidia unveiled a new AI computer for autonomous vehicles in October 2017 to run deep learning, computer vision and parallel computing algorithms. AI has become an essential component of automated driving technology, so it is important to understand how it works in autonomous and connected vehicles.

What is Artificial Intelligence?

John McCarthy, a computer scientist, coined the term ‘Artificial Intelligence’ in 1955. AI is defined as the ability of a computer program or machine to think, learn and make decisions. In general use, the term means a machine which mimics human cognition. With AI, we are getting computer programs and machines to do what humans do.

We feed these programs and machines massive amounts of data, which they analyze and process in order to reason logically and carry out tasks that humans would otherwise perform. Automating repetitive human tasks is just the tip of the AI iceberg: medical diagnostic equipment and autonomous cars have implemented AI with the objective of saving human lives.

The Growth of AI in Automotive

One market report expected the automotive AI market to be valued at $783 million in 2017 and to reach close to $11 billion by 2025, a compound annual growth rate (CAGR) of about 38.5%. IHS Markit predicted that the installation rate of AI-based systems in new vehicles would rise by 109% in 2025, compared to an adoption rate of 8% in 2015. AI-based systems will become standard in new vehicles, especially in these two categories:
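As a quick sanity check on these figures, the CAGR relates a start value, an end value and the number of years through the standard formula (end / start)^(1 / years) - 1. The Python sketch below applies it to the rounded numbers quoted above. With $11 billion as the 2025 value it gives roughly 39%, close to the reported 38.5%; the small gap is consistent with the 2025 figure being rounded.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in millions of US dollars: the 2017 value and the rounded 2025 projection.
rate = cagr(783, 11_000, 2025 - 2017)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 39.1%
```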

1. Infotainment human-machine interface, including speech recognition and gesture recognition, eye tracking and driver monitoring, virtual assistance and natural language interfaces.

2. Advanced Driver Assistance Systems (ADAS) and autonomous vehicles, including camera-based machine vision systems, radar-based detection units, driver condition evaluation and sensor fusion engine control units (ECUs).

Deep learning, a technique for implementing machine learning (which is itself an approach to achieving AI), is expected to be the largest and fastest-growing technology in the automotive AI market. It is currently used for voice recognition, voice search, recommendation engines, sentiment analysis, image recognition and motion detection in autonomous vehicles.

How Does AI Work in Autonomous Vehicles?

AI has become a popular buzzword these days, but how does it actually work in autonomous vehicles?

Let us first look at how humans drive a car: we use sensory functions such as vision and hearing to watch the road and the other cars on it. When we stop at a red light or wait for a pedestrian to cross the road, we rely on memory to make these quick decisions. Years of driving experience train us to look for the little things we encounter often on the road, whether it is a better route to the office or just a big bump in the road.

We are building autonomous vehicles that drive themselves, but we want them to drive the way human drivers do. That means we need to give these vehicles the sensory functions, cognitive functions (memory, logical thinking, decision-making and learning) and executive capabilities that humans use to drive. Over the last few years, the automotive industry has been evolving continuously to achieve exactly this.

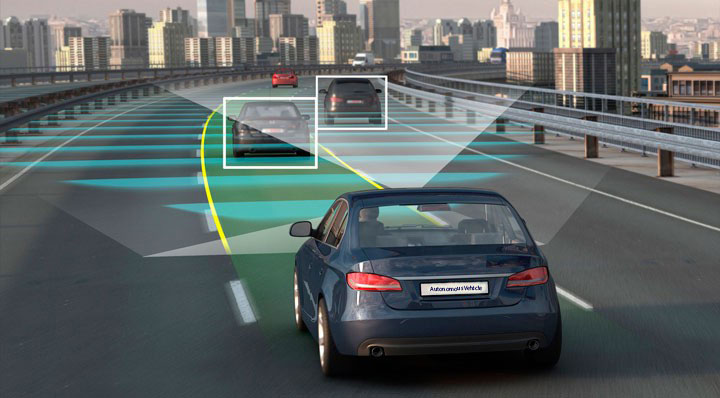

Gartner predicted that by 2020, 250 million cars would be connected with each other and with the infrastructure around them through various V2X (vehicle-to-everything communication) systems. As the amount of information fed into IVI (in-vehicle infotainment: the collection of hardware and software in a vehicle that provides audio or video entertainment) units or telematics systems grows, vehicles will be able to capture and share not only internal system status and location data but also changes in their surroundings, all in real time. Autonomous vehicles are being fitted with cameras, sensors and communication systems that generate massive amounts of data; applying AI to this data enables the vehicle to see, hear, think and make decisions much as human drivers do.
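To make the idea of sharing status and surroundings concrete, here is a hedged Python sketch of a simplified status broadcast that one vehicle might serialize and another might parse. It is not a real V2X message format (standards such as SAE J2735 define actual message sets); the class names, fields and units are hypothetical, and JSON is used only because it is easy to read.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DetectedObject:
    kind: str          # hypothetical label, e.g. "pedestrian" or "pothole"
    distance_m: float  # distance from the reporting vehicle, in metres


@dataclass
class VehicleStatusMessage:
    """Hypothetical, simplified V2X-style broadcast; not a standard message format."""
    vehicle_id: str
    timestamp_ms: int
    latitude: float
    longitude: float
    speed_mps: float
    objects: list[DetectedObject] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recursively turns nested dataclasses into plain dicts.
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, payload: str) -> "VehicleStatusMessage":
        data = json.loads(payload)
        # Rebuild the nested objects, which json.loads returns as dicts.
        data["objects"] = [DetectedObject(**obj) for obj in data["objects"]]
        return cls(**data)


# One vehicle reports its own state plus something it sees in its surroundings.
msg = VehicleStatusMessage(
    vehicle_id="car-42",
    timestamp_ms=1_700_000_000_000,
    latitude=48.1374,
    longitude=11.5755,
    speed_mps=13.9,
    objects=[DetectedObject("pedestrian", 12.5)],
)
payload = msg.to_json()                              # sent over the network
received = VehicleStatusMessage.from_json(payload)   # parsed by another vehicle
print(received.objects[0].kind, received.objects[0].distance_m)
```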

AI Perception Action Cycle in Autonomous Vehicles

A repetitive loop, called the Perception Action Cycle, is created when the autonomous vehicle generates data from its surrounding environment and feeds it into the intelligent agent, which in turn makes decisions and enables the autonomous vehicle to perform specific actions in that same environment.
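The loop can be illustrated with a deliberately tiny simulation. The Python sketch below is a hypothetical toy, not how any production vehicle works: the "environment" is just the gap to a stopped obstacle and the vehicle's speed, and the "intelligent agent" is a single rule that brakes when the kinematic stopping distance v^2 / (2a) plus a safety margin reaches the gap. The time step, deceleration and margin are assumed values chosen for illustration.

```python
from dataclasses import dataclass

DT = 0.1             # assumed simulation time step, in seconds
MAX_DECEL = 6.0      # assumed braking deceleration, in m/s^2
SAFETY_MARGIN = 5.0  # assumed extra buffer, in metres


@dataclass
class Environment:
    gap_m: float      # distance to a stopped obstacle ahead
    speed_mps: float  # ego vehicle speed

    def observe(self) -> tuple[float, float]:
        # Perception: the vehicle senses its surroundings (here, just two numbers).
        return self.gap_m, self.speed_mps

    def apply(self, action: str) -> None:
        # Action: the chosen command changes the environment seen on the next cycle.
        if action == "brake":
            self.speed_mps = max(0.0, self.speed_mps - MAX_DECEL * DT)
        self.gap_m -= self.speed_mps * DT


def decide(gap_m: float, speed_mps: float) -> str:
    # Decision: brake once stopping distance v^2 / (2a) plus the margin reaches the gap.
    stopping_distance = speed_mps ** 2 / (2 * MAX_DECEL)
    return "brake" if stopping_distance + SAFETY_MARGIN >= gap_m else "cruise"


def run_cycle(env: Environment, max_steps: int = 1000) -> int:
    """Repeat perceive -> decide -> act until the vehicle stops; return the cycle count."""
    for step in range(max_steps):
        if env.speed_mps == 0.0:
            return step
        gap, speed = env.observe()
        env.apply(decide(gap, speed))
    return max_steps


env = Environment(gap_m=60.0, speed_mps=15.0)
cycles = run_cycle(env)
print(f"stopped after {cycles} cycles with {env.gap_m:.1f} m to spare")
```

Each pass through the loop in `run_cycle` is one perceive, decide, act cycle. Because the action changes the environment that is observed on the next pass, the decisions form a cycle rather than a one-off choice.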

Let us break this process down into three main components:

Component 1: In-Vehicle Data Collection & Communication Systems

Autonomous vehicles are fitted with numerous sensors, radars and cameras that generate massive amounts of environmental data. Together these form the Digital Sensorium, through which the autonomous vehicle can see, hear and feel the road, the road infrastructure, other vehicles and every other object on or near the road, just as a human driver pays attention to the road while driving. This data is processed by supercomputers, and data communication systems then securely transmit the valuable information (input), describing the driving environment and/or the particular driving situation, to the cloud-based Autonomous Driving Platform.
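The article does not specify how the Digital Sensorium's readings are combined. One standard textbook technique for merging independent, noisy measurements of the same quantity is inverse-variance weighting (the static special case of a Kalman filter update). The Python sketch below fuses hypothetical camera, radar and lidar distance readings to the same object; the readings and noise variances are made-up values for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    distance_m: float  # measured distance to the same object
    variance: float    # assumed measurement noise variance, in m^2


def fuse(readings: list[Reading]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent readings.

    Returns (fused_estimate, fused_variance). Less noisy sensors get more weight,
    and the fused variance is never larger than the best single sensor's.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    estimate = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return estimate, 1.0 / total


# Hypothetical readings and noise levels, for illustration only.
readings = [
    Reading("camera", 24.8, 4.0),
    Reading("radar", 25.3, 0.25),
    Reading("lidar", 25.1, 0.04),
]
estimate, variance = fuse(readings)
print(f"fused distance: {estimate:.2f} m (variance {variance:.3f} m^2)")
```

Because the weights are 1 / variance, the least noisy reading dominates, and the fused variance (1 / sum of weights) is smaller than that of any single sensor. Real sensor fusion must also align sensors in time and space and associate detections with objects, which this sketch ignores.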

Component 2: Autonomous Driving Platform (Cloud)

The cloud-based Autonomous Driving Platform contains an intelligent agent that uses AI algorithms to make meaningful decisions.

It acts as the control policy, or the brain, of the autonomous vehicle. The intelligent agent is also connected to a database that acts as a memory in which past driving experiences are stored. This stored data, together with real-time input from the autonomous vehicle and its immediate surroundings, helps the intelligent agent make accurate driving decisions. The autonomous vehicle then knows what to do in this driving environment and/or particular driving situation.
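The article does not say which AI algorithms the intelligent agent uses. As a hedged illustration of how past experiences plus real-time input can lead to a decision, the Python sketch below uses a 1-nearest-neighbour lookup: the agent compares the current situation with stored experiences and reuses the action from the most similar one. The feature encoding, the `Experience` and `IntelligentAgent` names and the stored examples are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Experience:
    features: tuple[float, ...]  # hypothetical encoding of a past driving situation
    action: str                  # action that worked well in that situation


class IntelligentAgent:
    """Toy agent: reuses the action of the most similar stored experience (1-NN)."""

    def __init__(self, memory: list[Experience]) -> None:
        self.memory = list(memory)  # stands in for the experience database

    def decide(self, situation: tuple[float, ...]) -> str:
        if not self.memory:
            raise RuntimeError("no past experiences to draw on")
        # Euclidean distance between the live situation and each stored one.
        nearest = min(self.memory, key=lambda e: math.dist(e.features, situation))
        return nearest.action


# Illustrative features: (speed in m/s, gap to vehicle ahead in m, pedestrian nearby 0/1).
memory = [
    Experience((14.0, 60.0, 0.0), "keep_speed"),
    Experience((14.0, 15.0, 0.0), "slow_down"),
    Experience((8.0, 30.0, 1.0), "stop"),
]
agent = IntelligentAgent(memory)
print(agent.decide((13.0, 18.0, 0.0)))  # slow_down
```

In practice the features would need to be normalized (here the gap in metres dominates the distance), and a real driving policy would more likely be a learned model than a lookup table; the sketch only shows the role the memory plays in a decision.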

Component 3: AI-Based Functions in Autonomous Vehicles

Based on the decisions made by the intelligent agent, the autonomous vehicle is able to detect objects on the road, maneuver through traffic without human intervention and get to its destination safely. Autonomous vehicles are also being equipped with AI-based functional systems such as voice and speech recognition, gesture controls, eye tracking and other driver monitoring systems, virtual assistance, mapping and safety systems, to name a few. These functions are also carried out based on the decisions made by the intelligent agent in the Autonomous Driving Platform. These systems have been created to give customers a great user experience and keep them safe on the roads. The driving experiences generated by every ride are recorded and stored in the database to help the intelligent agent make more accurate decisions in the future.

This data loop, the Perception Action Cycle, repeats continuously. The more Perception Action Cycles take place, the more intelligent the agent becomes, resulting in more accurate decisions, especially in complex driving situations. The more vehicles are connected, the more driving experiences are recorded, enabling the intelligent agent to make decisions based on data generated by multiple autonomous vehicles. This means that not every autonomous vehicle has to go through a complex driving situation before it can understand it.
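Fleet-wide learning can be sketched in the same hedged spirit. In the hypothetical Python store below, any vehicle records the outcome of an action in a named situation, and any other vehicle can ask which action has the best observed success rate. The situation labels, action names and success criterion are illustrative assumptions; a real system would learn from raw sensor logs rather than hand-labelled strings.

```python
from collections import defaultdict
from typing import Optional


class SharedExperienceStore:
    """Hypothetical fleet-wide store: every vehicle writes outcomes, every vehicle reads them."""

    def __init__(self) -> None:
        # (situation, action) -> [successes, attempts]
        self._stats: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])

    def record(self, situation: str, action: str, success: bool) -> None:
        stats = self._stats[(situation, action)]
        stats[0] += int(success)
        stats[1] += 1

    def best_action(self, situation: str) -> Optional[str]:
        candidates = {a: s for (sit, a), s in self._stats.items() if sit == situation}
        if not candidates:
            return None  # no vehicle in the fleet has logged this situation yet
        # Choose the action with the highest observed success rate.
        return max(candidates, key=lambda a: candidates[a][0] / candidates[a][1])


store = SharedExperienceStore()
# Vehicle A meets a tricky unprotected left turn several times and logs the outcomes.
store.record("unprotected_left_turn", "creep_forward", success=True)
store.record("unprotected_left_turn", "creep_forward", success=True)
store.record("unprotected_left_turn", "turn_immediately", success=False)

# Vehicle B has never met this situation but can query the fleet's experience.
print(store.best_action("unprotected_left_turn"))  # creep_forward
print(store.best_action("flooded_underpass"))      # None
```

Each recorded outcome adds a sample to the success-rate estimate, so the more cycles the fleet completes, the more evidence each decision rests on, and a vehicle that has never encountered a situation still benefits from what other vehicles have logged.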

Artificial intelligence, especially neural networks and deep learning, has become an absolute necessity for making autonomous vehicles function properly and safely. AI is paving the way for Level 5 autonomous vehicles, which will need no steering wheel, accelerator pedal or brake pedal.