Video analytics for buses and trains helps operators detect security concerns and gain business insights in real time through features such as people counting, behavior analysis and people density analysis. This is the first in a series of articles exploring the latest developments in public transport video analytics and the challenges that remain to be overcome.

In February this year, an unidentified attacker stabbed two people to death and wounded two others in the New York subway on a Friday night. The police tried to identify the suspect from security camera footage and announced plans to deploy an additional 500 personnel in the subway to strengthen security.

Such incidents are not uncommon, yet our response to them has remained the same. Do we really have the resources to deploy additional personnel to every public transport system that needs better protection?

The case for more video analytics in transport

Onboard cameras have been useful for forensic purposes for quite some time. Today, however, video analytics technology can help us flag a potential incident before it happens. Steady gains in camera performance have made it possible to run more complex image analysis directly onboard, without necessarily relying on an additional server.

Where analytics once meant pure motion detection, today it can deliver detailed information about the behavior or appearance of people and vehicles, among other things. The camera is gradually becoming a Big Data IoT device. Video analytics, acting as a ‘modular’ software sensor, actively drives this development, especially with the progressive adoption of artificial intelligence, and deep learning in particular.

Functions and features of onboard analytics

Of course, there are established passenger-related functions such as people counting and passenger density evaluation, which work in real time. There are also more advanced uses that simplify searching large volumes of recorded video for specific events or items. A smaller number of technical applications run on the forward-facing cameras installed on trains, for example “near-miss” incident detection: dedicated algorithms make it easy to find near-miss incidents in the recordings.

All these applications have moved beyond plain old “video detection” based simply on motion detection to artificial intelligence. In this environment, deep learning serves two main use cases:

1. Eliminating “unwanted noise” in images and conditions, so that only the content of interest is used for the target purpose

2. Classifying target objects and their attributes, e.g., person/face, glasses/no glasses, top-wear color, bottom-wear color, luggage (yes/no). This information is saved as metadata in the video recorders.
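Because the attributes are stored as metadata, an investigator can search them instead of scrubbing through hours of footage. The sketch below illustrates that idea; the `PersonRecord` schema, its field names and the `search` helper are hypothetical and do not reflect any particular recorder's format.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class PersonRecord:
    """One classified detection as a recorder might store it (hypothetical schema)."""
    camera_id: str
    timestamp_s: float      # seconds since start of recording
    glasses: bool
    top_color: str
    bottom_color: str
    luggage: bool


def search(records: Iterable[PersonRecord], *,
           glasses: Optional[bool] = None,
           top_color: Optional[str] = None,
           bottom_color: Optional[str] = None,
           luggage: Optional[bool] = None) -> Iterator[PersonRecord]:
    """Yield records matching every attribute given; None means "don't care"."""
    for r in records:
        if glasses is not None and r.glasses != glasses:
            continue
        if top_color is not None and r.top_color != top_color:
            continue
        if bottom_color is not None and r.bottom_color != bottom_color:
            continue
        if luggage is not None and r.luggage != luggage:
            continue
        yield r


# Usage: find people in red tops carrying luggage.
records = [
    PersonRecord("car2-cam1", 12.0, glasses=True, top_color="red", bottom_color="blue", luggage=False),
    PersonRecord("car2-cam3", 47.5, glasses=False, top_color="red", bottom_color="black", luggage=True),
    PersonRecord("car4-cam1", 90.2, glasses=False, top_color="white", bottom_color="blue", luggage=True),
]
hits = list(search(records, top_color="red", luggage=True))
print([(h.camera_id, h.timestamp_s) for h in hits])  # [('car2-cam3', 47.5)]
```

Each hit points to a camera and a time offset, so only the matching clips need to be reviewed. A production system would query an indexed store rather than scan a list, but the filtering logic is the same.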

Beyond onboard uses, there are also video analytics applications on the ground, in stations. From a security and safety point of view, they are a ‘must have’, both for real-time situation assessment and for subsequent investigation. Object classification is one of the most important functions in these newer applications.

Challenges to overcome

More and more cameras are being installed onboard to give passengers a smooth, safe and secure journey, but several challenges limit what they can do.

Integration compatibility

It is essential to ensure that the available hardware resources are compatible with the software's requirements. The advantages of the edge approach become particularly clear when both components work in harmony. Even the most reliable software adds no value if it is not optimized to run at the edge.

In our daily business, we also see it as our task to help our partners tailor the best solutions for their customers. With the ever-growing range of products on the market, it can be quite difficult to make the right choice. A common pitfall is the assumption that any software labeled “deep learning” or “AI” is an all-purpose tool that works perfectly in every situation without further intervention. Project conditions must always be taken into account, and managing customers' expectations and requirements is key.

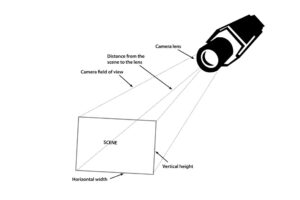

Limited field of view

The biggest problem for video analytics on onboard cameras is the limited field of view. Buses and trains have very low ceilings for mounting cameras, so a single camera usually cannot cover a large part of the vehicle.

There is a trend toward 360-degree cameras, but many analytics cannot work with 360-degree footage. I would say that is really the biggest challenge. One way to overcome it is to use 360-degree cameras and make sure the analytics software is compatible with them; another is to install more cameras capturing footage from different angles.
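One common way to make circular 360-degree footage usable by analytics that expect a conventional frame is to "unwrap" it into a rectangular panorama. The function below is a minimal sketch of a polar unwrap, assuming a known image-circle centre and a simple linear mapping from radius to panorama row; real fisheye lenses need their calibrated projection model, and vendors may instead dewarp into several virtual perspective views. The function name and parameters are illustrative only.

```python
import math


def unwrap_360(image, cx, cy, r_inner, r_outer, out_w, out_h):
    """Unwrap a circular 360-degree image into a rectangular panorama.

    image: 2-D list of pixel values indexed image[y][x].
    (cx, cy): centre of the image circle; r_inner..r_outer: radial band kept.
    Each output column is one viewing angle, each output row one radius.
    Assumes a linear radius mapping and uses nearest-neighbour sampling.
    """
    h, w = len(image), len(image[0])
    out = [[0] * out_w for _ in range(out_h)]
    for v in range(out_h):
        # Row 0 samples the outer edge of the circle, the last row the inner edge.
        r = r_outer - (r_outer - r_inner) * v / max(out_h - 1, 1)
        for u in range(out_w):
            theta = 2 * math.pi * u / out_w
            x = int(round(cx + r * math.cos(theta)))
            y = int(round(cy + r * math.sin(theta)))
            if 0 <= x < w and 0 <= y < h:  # pixels outside the frame stay 0
                out[v][u] = image[y][x]
    return out


# Usage: a synthetic 21x21 frame whose pixel value encodes x + 100*y.
img = [[x + 100 * y for x in range(21)] for y in range(21)]
pano = unwrap_360(img, cx=10, cy=10, r_inner=0, r_outer=10, out_w=4, out_h=11)
print(pano[0])  # outer ring at 0, 90, 180, 270 degrees: [1020, 2010, 1000, 10]
```

In practice the output would be much wider than tall (for example several thousand columns for a full circle), and bilinear interpolation would replace nearest-neighbour sampling to avoid jagged edges.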

You can also install devices designed for specific purposes. For instance, regular onboard cameras are often not the best choice for people counting; LiDAR solutions, which are becoming increasingly common, are worth considering here.
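Whether the counting sensor above a door is a camera or a LiDAR unit, a typical approach is to track each person and count crossings of a virtual line. The class below sketches that idea under stated assumptions: the sensor (hypothetically) reports a per-frame mapping of track ID to position along the door axis, and positions greater than `line_y` lie inside the vehicle. `DoorCounter` and its parameters are illustrative names, not a product API.

```python
from dataclasses import dataclass, field


@dataclass
class DoorCounter:
    """Counts boardings/alightings from tracked positions crossing a virtual line.

    Assumption: y < line_y is the platform side, y >= line_y the vehicle side.
    """
    line_y: float
    boarded: int = 0
    alighted: int = 0
    _last_y: dict = field(default_factory=dict, init=False, repr=False)

    def update(self, positions: dict) -> None:
        """positions: {track_id: y} for every person tracked in this frame."""
        for track_id, y in positions.items():
            prev = self._last_y.get(track_id)
            if prev is not None:
                if prev < self.line_y <= y:
                    self.boarded += 1    # crossed from platform into vehicle
                elif y < self.line_y <= prev:
                    self.alighted += 1   # crossed from vehicle onto platform
            self._last_y[track_id] = y

    @property
    def net_onboard(self) -> int:
        return self.boarded - self.alighted


# Usage with three frames of tracker output.
counter = DoorCounter(line_y=100.0)
counter.update({1: 80.0, 2: 130.0})   # first sighting: no crossings yet
counter.update({1: 110.0, 2: 95.0})   # track 1 boards, track 2 alights
counter.update({1: 140.0, 3: 60.0})   # no new crossings
print(counter.boarded, counter.alighted, counter.net_onboard)  # 1 1 0
```

A real deployment would add hysteresis (two lines instead of one) so that a person hovering at the threshold is not counted repeatedly, and would discard tracks that have not been seen for a while.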

Challenge of a moving vehicle

A final point is the nature of the environment in which the cameras are installed. Onboard video analytics faces many technical challenges, mainly from the constantly changing background seen through the windows as the train moves (changing daylight, tunnels, shadows, etc.) and from high crowd density, e.g., in city trains or metros.
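To see why plain motion detection struggles onboard, consider simple frame differencing: any pixel whose brightness changes beyond a threshold counts as motion, so scenery rushing past a window looks exactly like a moving passenger. The sketch below shows that baseline plus a static "ignore" mask over the window area. The mask is an illustrative mitigation of our own, not the author's method, and it does nothing for lighting changes that affect the whole frame, such as entering a tunnel; that limitation is part of why the industry has moved to AI-based analytics.

```python
def motion_mask(prev, curr, threshold, ignore=None):
    """Frame differencing: True where |curr - prev| > threshold.

    prev, curr: 2-D lists of grayscale values (0-255) of the same shape.
    ignore: optional 2-D list of booleans; True marks pixels to skip,
    e.g. a hand-drawn mask over the windows (hypothetical mitigation).
    """
    h, w = len(curr), len(curr[0])
    return [[(ignore is None or not ignore[y][x])
             and abs(curr[y][x] - prev[y][x]) > threshold
             for x in range(w)] for y in range(h)]


def motion_fraction(mask):
    """Share of pixels flagged as moving."""
    cells = [c for row in mask for c in row]
    return sum(cells) / len(cells)


# Usage: a 4x4 frame where column 0 is a window and one passenger moves.
prev = [[50, 10, 10, 10] for _ in range(4)]
curr = [[200, 10, 10, 10] for _ in range(4)]   # scenery changes in the window column
curr[2][2] = 90                                 # a passenger moves in the aisle
window = [[x == 0 for x in range(4)] for _ in range(4)]

print(motion_fraction(motion_mask(prev, curr, threshold=25)))                 # 0.3125
print(motion_fraction(motion_mask(prev, curr, threshold=25, ignore=window)))  # 0.0625
```

Without the mask, four of the five flagged pixels come from the window; with it, only the passenger remains.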

Conclusion

Onboard video surveillance is an integral part of public transport in most developed economies. However, onboard cameras no longer need to remain passive recorders of forensic evidence. By leveraging the power of video analytics, operators can offer passengers proactive security.

In the next part of this series, we will explore the growing role of business intelligence in onboard video analytics. Keep reading!

Adapted from a&s Magazine